AI & Data Science

Microsoft AR Copilot Exploration

My rendition of the Microsoft AR Copilot experience.

Software: Unity / 3ds Max / Figma / Photoshop / After Effects / Audacity / Microsoft Speech Studio

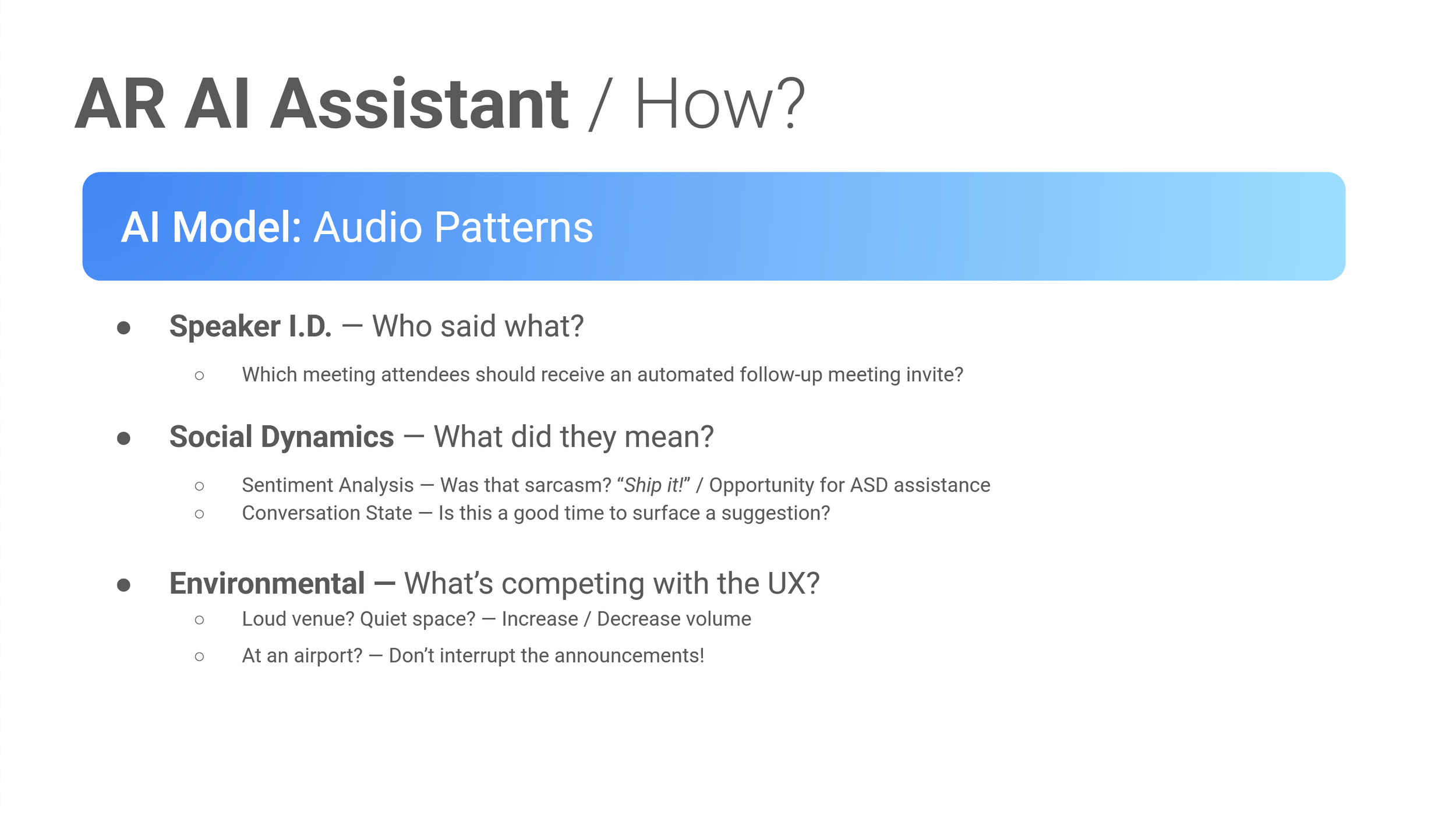

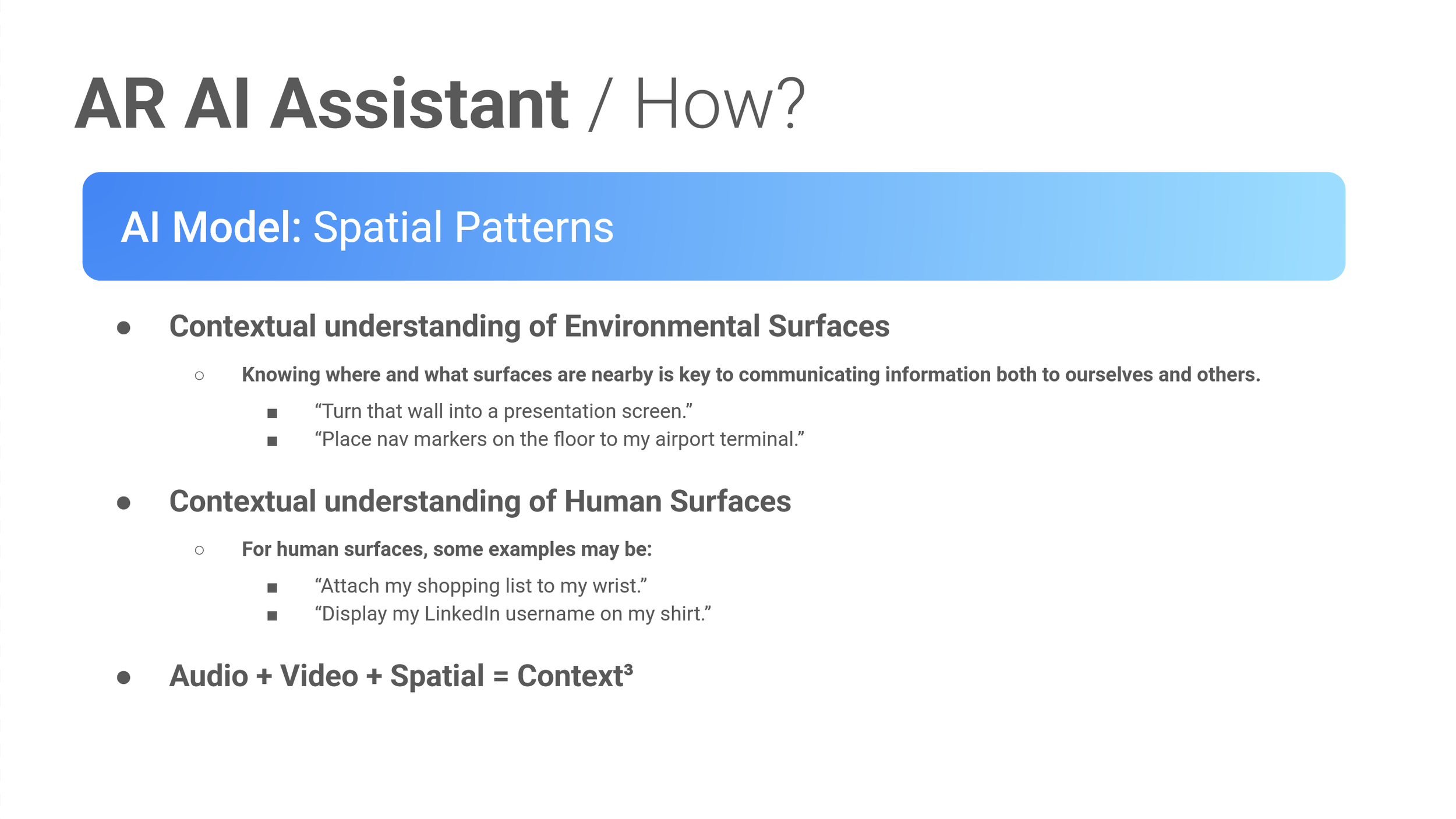

Below: A selection of the slides I authored while exploring the intersection of an augmented reality AI assistant and the underlying AI models that could be employed to develop the ideal spatial AI assistant. These slides are a good example of how understanding hardware and software capabilities allows extrapolation of novel UX patterns.

Sample Ideation exercise

Below is a collection of ideas I produced for improving the safety and efficiency of collecting and managing law enforcement evidence using AI/ML/CV models. The sole goal of this exercise was to demonstrate strategy, vision and analytical skills.

1) A/V Data Collection + AI Inference

Auto-labeling of subject ID:

Allows the bodycam to use facial recognition to passively query criminal photo databases. Coupled with high-priority alerts (warrants, etc.), this can surface information to the officer when it is needed, improving situational awareness in real time.

Audio capture of voices matched to subject ID — ground truth established by video of the individual while speaking:

Enriches transcription and positively identifies out-of-frame speakers.

OCR trained against individual officer handwriting (for those LEA who prefer handwriting):

The officer handwrites “The quick brown fox jumps over the lazy dog”, which is then captured by camera.

Allows field notes to be reliably transcribed to standardized digital formats and LEA forms.

ML models for optical classification of captured media:

Shoe print registry / tire mark registry / tool mark registry, automotive paint color registry, etc.

Continuously running as a background process, ingesting new registry entries while looking for matches to unresolved cases, and upon a match, sending a notification to all law enforcement personnel associated with the case for human review.
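As a rough illustration of the matching step described above, the sketch below compares a new registry entry against unresolved cases and flags close matches for human review. It assumes each item has already been reduced to a numeric feature vector by some upstream model; the field names (`embedding`, `case_id`), the sample vectors, and the 0.9 threshold are all hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def find_matches(new_entry, unresolved_cases, threshold=0.9):
    """Return (case_id, score) pairs at or above the threshold, best first."""
    matches = []
    for case in unresolved_cases:
        score = cosine_similarity(new_entry["embedding"], case["embedding"])
        if score >= threshold:
            matches.append((case["case_id"], score))
    return sorted(matches, key=lambda match: match[1], reverse=True)

# Hypothetical data: in practice the vectors would come from a CV model.
unresolved = [
    {"case_id": "CASE-001", "embedding": [0.9, 0.1, 0.0]},
    {"case_id": "CASE-002", "embedding": [0.0, 1.0, 0.0]},
]
new_shoe_print = {"entry_id": "REG-42", "embedding": [1.0, 0.1, 0.0]}

for case_id, score in find_matches(new_shoe_print, unresolved):
    # A match only triggers a notification; a person makes the final call.
    print(f"Flag {case_id} for human review (similarity {score:.2f})")
```

The threshold is the main design lever here: set it lower and personnel see more candidate matches to review, set it higher and fewer but stronger matches are surfaced.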

ML models for matching suspect gait:

Gait can be a reliable ID: body, vehicle or CCTV camera feeds automatically and continuously compare video of individuals’ gaits against those of persons of interest.

Auto-labeling of scene objects to aid evidence retrieval:

Allows for queries such as "Find video assets related to the recent hit-and-run with a red pickup."

2) Asset Standardization + AI Interoperability:

Text / Video / Audio encoders:

Procedural operations homogenize all rich media evidence for interoperability between LEA RMS implementations

Transform documentation from all police precincts into a universal format for both LEA & AI models:

Background or in-situ scanning of documents / screens / evidence bags / video → CV subject and object ID predictions → text / labels / metadata extracted → SLM/LLM preprompt instructions to identify keywords / fields of interest (e.g. "VIN", "Charge", "Age") to then determine the appropriate universal form type (evidence chain of custody, traffic stop, etc.)

Personnel-in-the-loop approval ✓

If other agencies use alternative records management systems and are not able or willing to adopt the universal format, modify an existing LLM transformer to passively ingest their evidence and make it available to the ecosystem.
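The last step of the scanning pipeline above (fields of interest → universal form type → personnel approval) could be sketched roughly as follows. This stands in a plain keyword overlap for the SLM/LLM step, and the form names and keyword sets are hypothetical examples rather than real LEA form definitions.

```python
# Hypothetical mapping of universal form types to the fields that suggest them.
FORM_KEYWORDS = {
    "traffic_stop": {"vin", "license plate", "citation"},
    "chain_of_custody": {"evidence bag", "seal number", "custodian"},
    "arrest_report": {"charge", "age", "booking"},
}

def suggest_form_type(extracted_labels):
    """Score each form type by how many of its keywords were extracted."""
    labels = {label.lower() for label in extracted_labels}
    scores = {
        form: len(keywords & labels)
        for form, keywords in FORM_KEYWORDS.items()
    }
    best_form = max(scores, key=scores.get)
    if scores[best_form] == 0:
        return None, scores  # nothing recognizable; leave it to a person
    return best_form, scores

def build_suggestion(extracted_labels):
    """Package the suggestion so that it always waits for human approval."""
    form, scores = suggest_form_type(extracted_labels)
    return {"suggested_form": form, "scores": scores, "approved": False}

suggestion = build_suggestion(["VIN", "Citation", "Age"])
print(suggestion["suggested_form"], suggestion["scores"])
```

Every suggestion starts with `approved` set to `False`, which mirrors the personnel-in-the-loop step: the model proposes a form type and a person confirms it.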

3) Asset Discoverability & Insights:

Database matching officer number and name to accelerate communication and asset retrieval

Allows for queries such as “connect me with detective Nguyen at the North Seattle precinct”.

Natural language processing in concert with LLM to decrease retrieval time

"Pull up my traffic stops from last Thursday on Aurora Ave"

“Connect me with the detective assigned to the hit-and-run case I filed in Q4 of 2022.”

“4 matches found, do you recall any other details of the case?”

“I believe there was a blue sedan involved.”

“Match found. Detective Marcus Johnson — connecting now.”
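The back-and-forth above is essentially an iterative filter: each new detail the officer recalls narrows the candidate set until one case remains. A minimal sketch, assuming the natural-language request has already been parsed into structured fields (the record layout and all values other than the dialogue's own details are invented for illustration):

```python
def narrow_cases(cases, **criteria):
    """Keep only the cases whose fields equal every supplied criterion."""
    return [
        case for case in cases
        if all(case.get(field) == value for field, value in criteria.items())
    ]

# Hypothetical case records.
CASES = [
    {"offense": "hit-and-run", "quarter": "2022-Q4", "vehicle": "red pickup", "detective": "Detective A"},
    {"offense": "hit-and-run", "quarter": "2022-Q4", "vehicle": "white van", "detective": "Detective B"},
    {"offense": "hit-and-run", "quarter": "2022-Q4", "vehicle": "black SUV", "detective": "Detective C"},
    {"offense": "hit-and-run", "quarter": "2022-Q4", "vehicle": "blue sedan", "detective": "Marcus Johnson"},
    {"offense": "burglary", "quarter": "2022-Q4", "vehicle": None, "detective": "Detective D"},
]

# First request: offense and time frame only.
first_pass = narrow_cases(CASES, offense="hit-and-run", quarter="2022-Q4")
if len(first_pass) > 1:
    print(f"{len(first_pass)} matches found, do you recall any other details of the case?")

# Follow-up detail supplied by the officer.
second_pass = narrow_cases(first_pass, vehicle="blue sedan")
if len(second_pass) == 1:
    print(f"Match found. Detective {second_pass[0]['detective']}, connecting now.")
```

In a real system the LLM's job would be turning each spoken sentence into the keyword arguments passed to `narrow_cases`; the filtering itself can stay simple and auditable.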

Recommendation algorithm to find similar or directly related assets, e.g. case files of subject ID

Upon reaching an acceptable confidence level for the subject ID, a notification is sent to LEA personnel to review the insights.

LLM parsers independently query the evidence database to provide recommended COAs:

Essentially, LLMs + ML models = continuous background processing of evidence to extrapolate missed connections between cases, persons of interest, police and prosecution personnel, and evidence, 24/7 without human input (obviously compute is a concern).

LLM multi-disciplinary bot army:

IBM recently unveiled a multi-disciplinary, multi-agent approach to resolving complex problems (e.g. an LLM is instructed to evaluate a problem as a physicist agent, then as a mathematician agent, and then a higher-level agent is instructed to solve the original problem using the insights from both the physicist and mathematician agents).

This may come in the form of:

Officer agent

CSI agent

Records conformance agent

Rights conformance agent

Paralegal agent

County Clerk agent

Criminal defense attorney agent

Prosecutorial agent

Judge agent

Upon ingestion of insights from each agent, an executive summary of insights and recommended courses of action is produced with significantly improved accuracy and logic compared to a single universal agent.

Reveal previously unforeseen issues with case files — evidence chain of custody, clerical errors, etc.

Reveal historical case precedent insights

Case strategy recommendations

Attorney / judge profile generation to anticipate rulings based on previous similar cases (feeds into case strategy)
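To make the multi-agent idea more concrete, here is a deliberately simplified sketch of the fan-out and summarize pattern. It is not IBM's implementation: each "agent" below is a plain Python function with one hard-coded check, standing in for what would be an LLM call with a role-specific prompt, and the case-file fields (`evidence`, `custody_log`, `rights_advised`) are hypothetical.

```python
def records_conformance_agent(case_file):
    """Stand-in agent: flags evidence items with no chain-of-custody log."""
    return [
        f"Evidence {item['id']} has no chain-of-custody log."
        for item in case_file["evidence"]
        if not item.get("custody_log")
    ]

def rights_conformance_agent(case_file):
    """Stand-in agent: flags a missing record of the rights advisement."""
    if not case_file.get("rights_advised"):
        return ["No record that the subject was advised of their rights."]
    return []

# Only two of the listed roles are stubbed out here.
AGENTS = {
    "Records conformance agent": records_conformance_agent,
    "Rights conformance agent": rights_conformance_agent,
}

def executive_summary(case_file, agents=AGENTS):
    """Run every agent on the same case file and merge their findings."""
    issues = []
    for name, agent in agents.items():
        for finding in agent(case_file):
            issues.append(f"{name}: {finding}")
    return issues

# Hypothetical case file.
case_file = {
    "rights_advised": False,
    "evidence": [
        {"id": "E-1", "custody_log": ["collected", "sealed"]},
        {"id": "E-2", "custody_log": []},
    ],
}

for issue in executive_summary(case_file):
    print(issue)
```

The higher-level agent is reduced here to a simple merge of findings; in the approach described above it would itself be a model that weighs the specialists' insights before recommending a course of action.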

4) Data Sharing:

Inter-agency "Follow-Up Service" to recursively identify assets which should be shared with other agencies or officers with historical relevance.

E.g. Another agency or officer was part of a previous case involving the individual I stopped in traffic today — this information is sent as a notification to the relevant parties.

AR/VR playback of crime scene investigation:

CSI sets up a few off-the-shelf RGB + depth cameras at the perimeter of the scene. Each camera's position and orientation offset from the scene origin allows the RGB + depth data to be “stitched” together (think point cloud), then played back on an AR/VR headset as a traversable spatial re-creation of the entire scene investigation.
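The stitching step comes down to moving every camera's points into one shared scene coordinate system using that camera's offset from the scene origin. The sketch below is a simplified illustration: it assumes each camera's pose is just a position plus a yaw (rotation about the vertical axis), whereas a real rig would need full 3D orientation and calibration. The camera names, poses and points are invented.

```python
import math

def camera_to_scene(point, camera_position, camera_yaw_deg):
    """Rotate a camera-local point about the vertical (y) axis, then translate."""
    x, y, z = point
    yaw = math.radians(camera_yaw_deg)
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    x_rot = cos_y * x + sin_y * z
    z_rot = -sin_y * x + cos_y * z
    px, py, pz = camera_position
    return (x_rot + px, y + py, z_rot + pz)

def stitch(cameras):
    """Merge every camera's local points into one scene-space point cloud."""
    cloud = []
    for camera in cameras:
        for point in camera["points"]:
            cloud.append(
                camera_to_scene(point, camera["position"], camera["yaw_deg"])
            )
    return cloud

# Hypothetical two-camera setup observing the same object from two sides.
cameras = [
    {"name": "cam_a", "position": (0.0, 0.0, 0.0), "yaw_deg": 0.0,
     "points": [(2.0, 0.0, 2.0)]},
    {"name": "cam_b", "position": (4.0, 0.0, 2.0), "yaw_deg": -90.0,
     "points": [(0.0, 0.0, 2.0)]},
]

for scene_point in stitch(cameras):
    print(tuple(round(value, 3) for value in scene_point))
```

Both cameras report the object at different local coordinates, but after applying each camera's offset the two points land on (approximately) the same scene position, which is what lets the separate captures line up into a single traversable point cloud.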

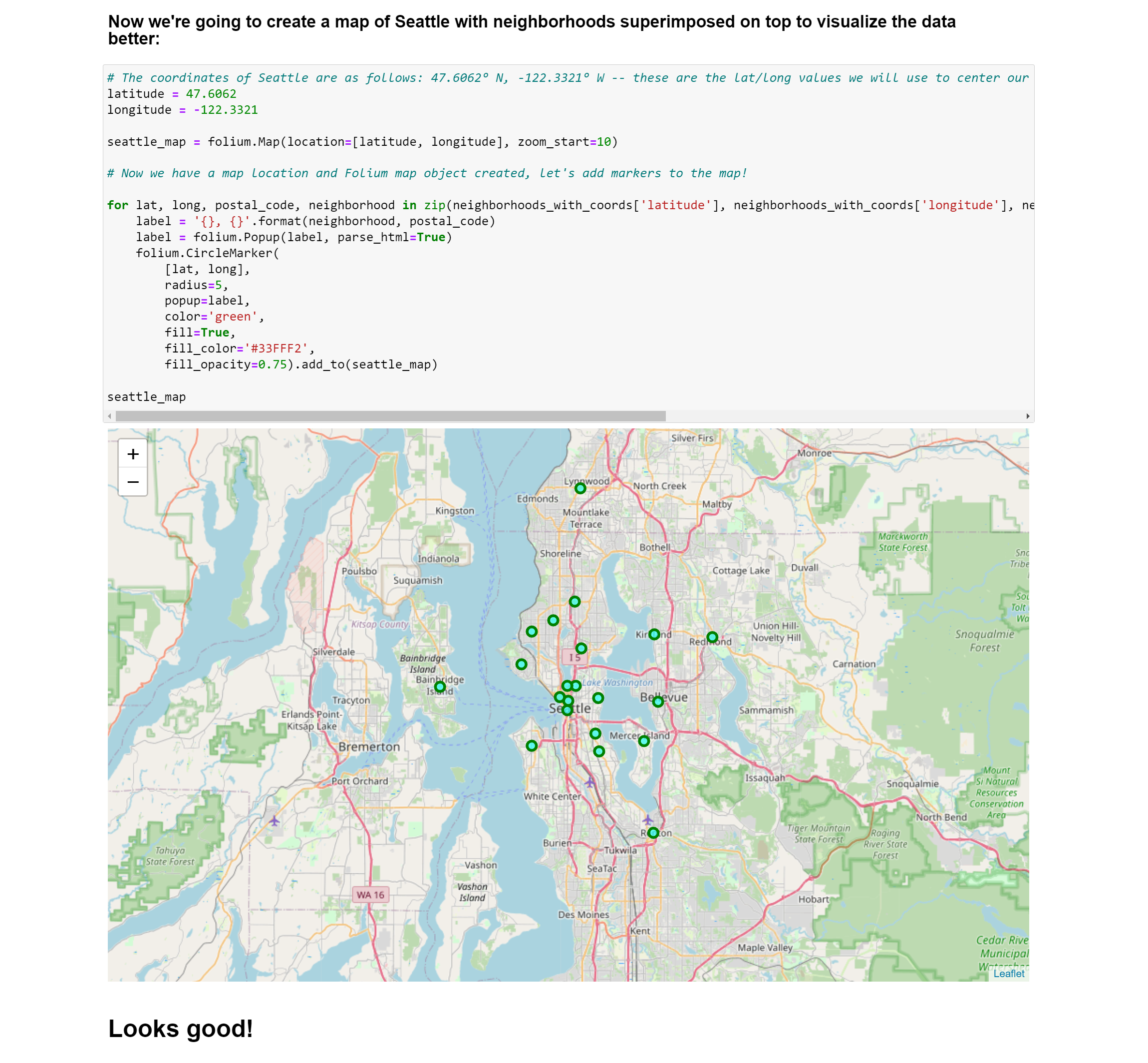

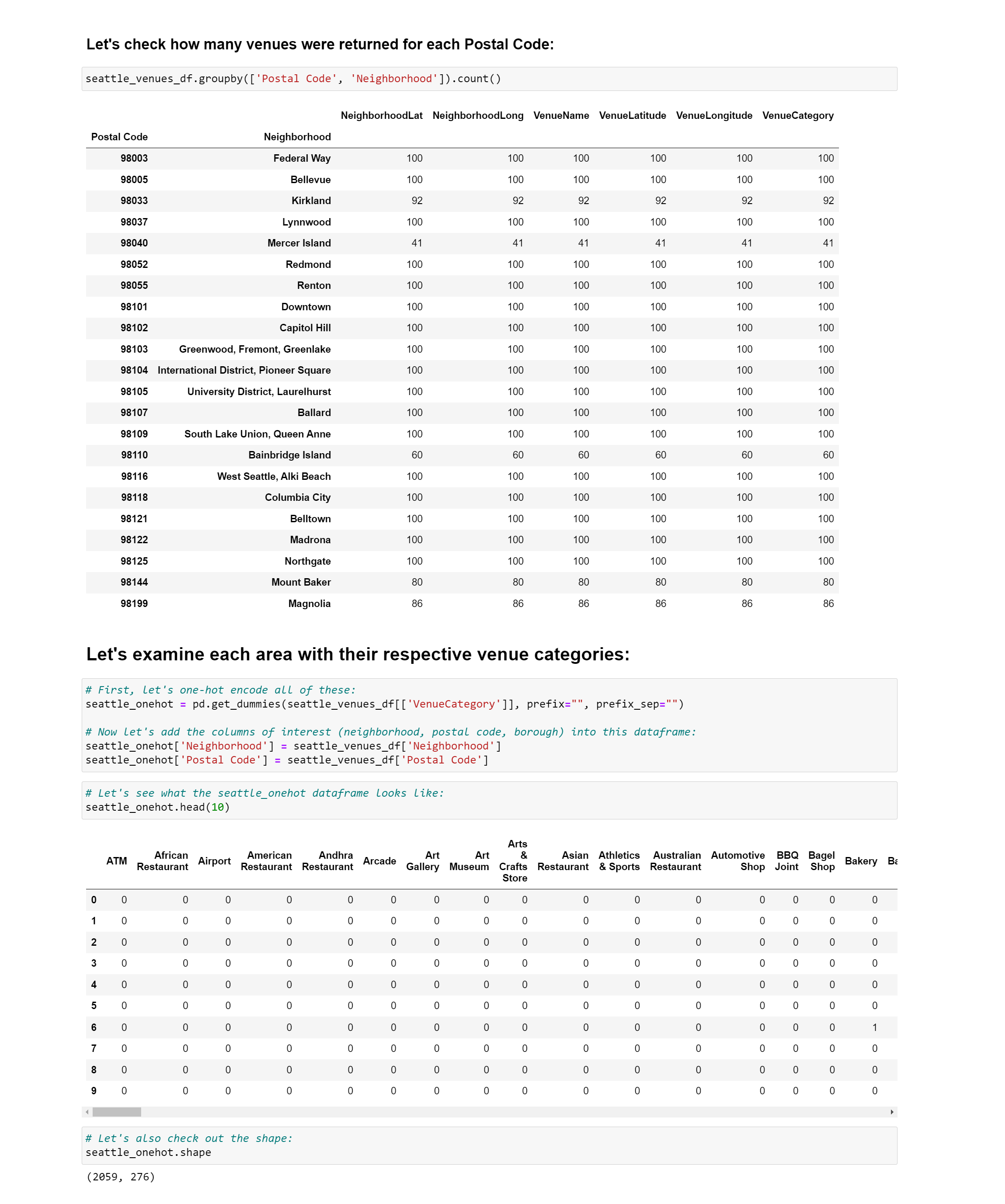

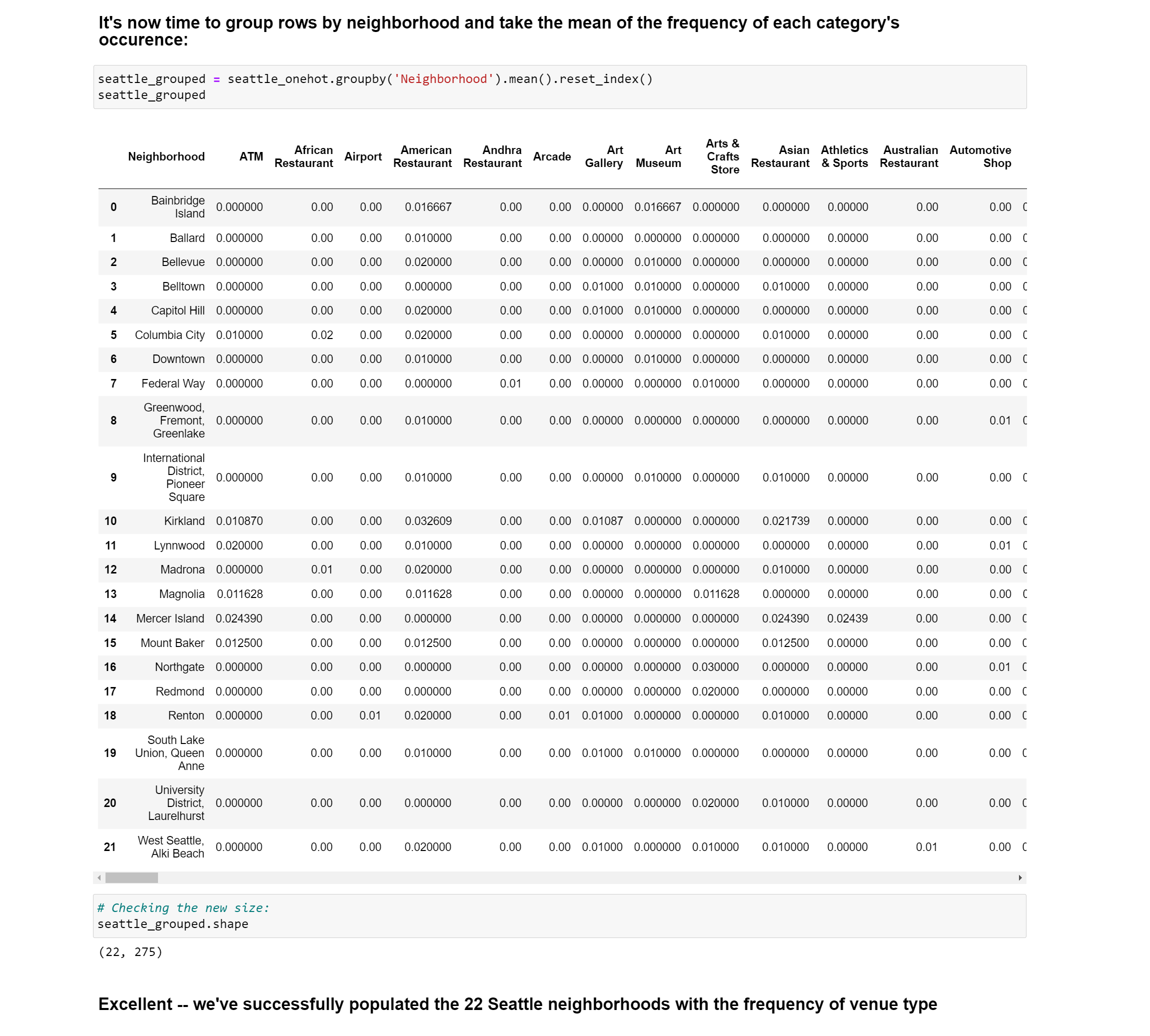

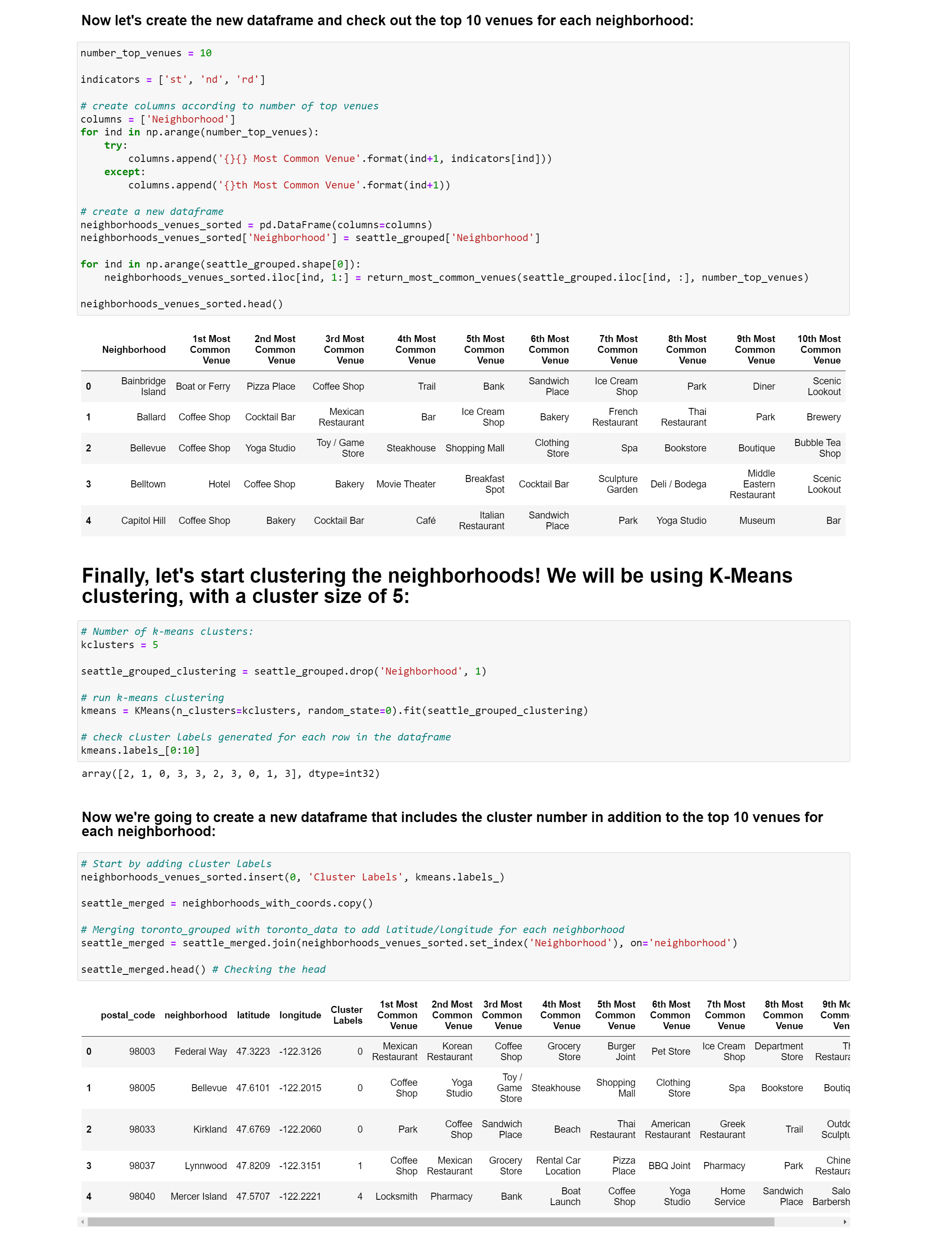

IBM Data Science Professional Certificate

Below you will find my IBM Data Science Professional Certificate capstone project. It does not come close to capturing everything I learned in the program; in particular, it lacks prediction models, which I found to be the most fascinating part of the coursework. I was surprised to find myself doing the math on paper for fun. I may post those efforts in the near future, as I am particularly interested in the intersection of Product Design, Prediction Models and LLMs.

Credly Verification: https://www.credly.com/users/jeremy-romanowski

GitHub URL: https://github.com/jeremyromanowski/IBM_DataScienceCert_Capstone